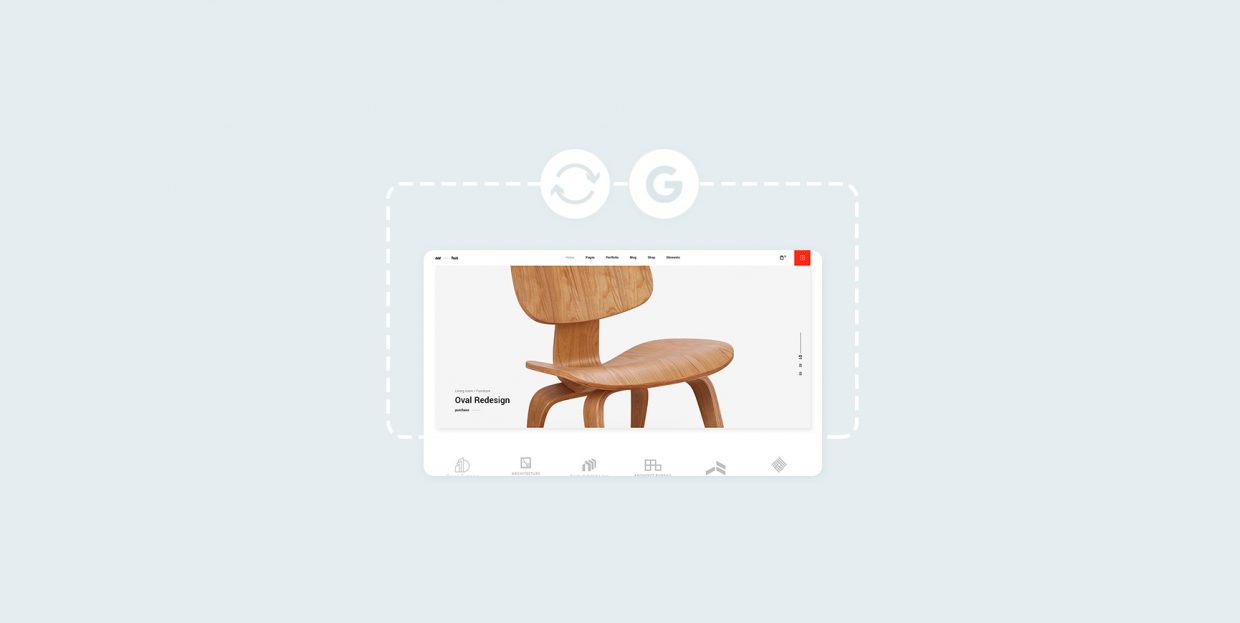

How to Tell Google to Recrawl Your WordPress Site

Google is incredibly good at many things we now consider nearly essential to daily life. It helps us find information. It helps us find the nearest store with that thing we wanted. It helps us get from point A to point B with as little fuss as possible.

Among all the things Google Search does well, one stands out: crawling websites. Google's crawlers are tremendously good at what they do, reading your pages so that Google can index them and deliver them in search results.

But occasionally, you might want to ask Google to recrawl your website, going over a single page or the whole site one more time. If that's what you want to do, this article shows you how.

Web crawling is the process in which a web spider (also called a spider bot or crawler) discovers and reads the pages on your website. It's an important part of a search engine's job: together with indexing, it's what makes your website appear in search results.

Typically, a search engine such as Google employs crawlers to "read" the pages it might index, gathering and storing data from them. This lets it serve those pages as results when someone runs a search and the engine's algorithms judge that your website might answer the query.

You can see this in practice every time you go to Google and type in whatever you're interested in at that moment. The results page fills with possible answers, ranked by Google's algorithm but made possible by the search engine's ability to crawl and index the information on your website.

The crawling process starts when Google gets the address of your page. This can happen in a couple of ways. Google can, for example, find a link to it on another website. Your managed hosting provider might signal Google too, or you can do it yourself by providing a sitemap, which we'll come back to later.

The search engine then dispatches its crawlers, which find and analyze the content and code of the page. Next, it indexes the page (unless something prevents it from doing so) and shows it when someone searches for it. It's almost as simple as that.

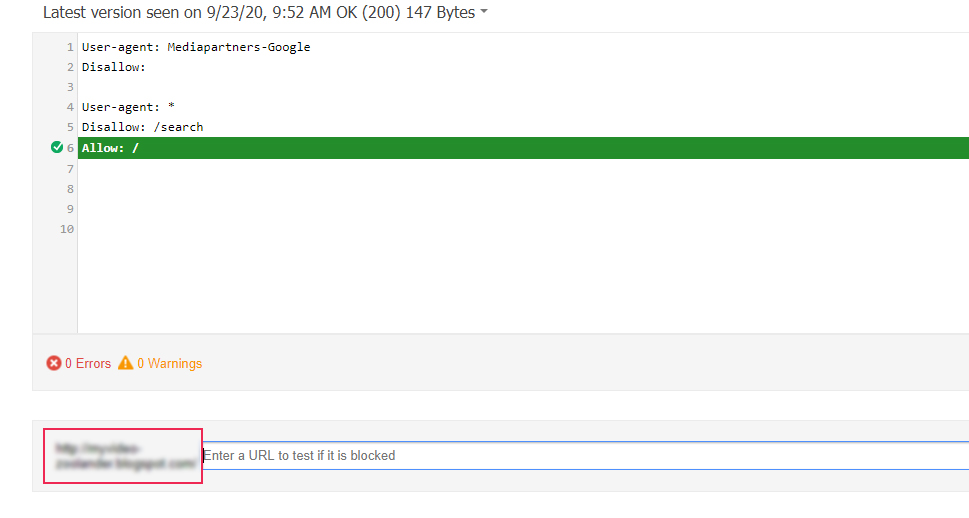

Crawling, just like indexing, is a process with some nuance to it. You can, for example, opt out of the process entirely and tell Google not to crawl your website. You can also point out specific pages you don't want Google to crawl. You can even prevent Google from crawling resources such as images or media files. All of this is managed through a robots.txt file placed at the root of your site.

However, telling Google not to crawl a page won't prevent it from appearing in search results. If Google's spider finds your page through a link on a third-party website, it might still index it and show a version of it in search results. If you want certain pages, such as admin login pages or thank-you pages, kept out of Google's search results, add a noindex robots meta tag to them. Keep in mind that Google can only see that tag if it's allowed to crawl the page, so don't also block those pages in robots.txt.
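If you want to double-check that a page actually carries the tag, you can look for it in the page's HTML. Below is a minimal Python sketch using only the standard library. It assumes you already have the page's HTML as a string, and `RobotsMetaFinder` and `has_noindex` are hypothetical helper names made up for this example. It only inspects `<meta name="robots">` and `<meta name="googlebot">` tags, not the `X-Robots-Tag` HTTP header, which can also carry a noindex directive.

```python
from html.parser import HTMLParser


class RobotsMetaFinder(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: list[str] = []

    def handle_starttag(self, tag, attrs):
        # HTMLParser already lowercases tag and attribute names.
        if tag != "meta":
            return
        attributes = {name: (value or "") for name, value in attrs}
        if attributes.get("name", "").lower() in ("robots", "googlebot"):
            # content="noindex, follow" -> ["noindex", "follow"]
            for part in attributes.get("content", "").split(","):
                if part.strip():
                    self.directives.append(part.strip().lower())


def has_noindex(html: str) -> bool:
    """Return True if the HTML contains a robots meta tag that blocks indexing."""
    finder = RobotsMetaFinder()
    finder.feed(html)
    finder.close()
    # "none" is shorthand for "noindex, nofollow".
    return "noindex" in finder.directives or "none" in finder.directives
```

For example, `has_noindex('<meta name="robots" content="noindex, follow">')` returns `True`, while a page whose robots meta tag says `index, follow` returns `False`.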

The main reason people ask Google to recrawl their website is that they've changed some pages and want Google to reflect those changes as soon as possible. Asking for a website-wide recrawl is common after a significant content audit. You might also ask for one after a rebranding, or even after switching from, say, a blog WordPress theme to a magazine WordPress theme.

As for single-page requests, they're useful when you publish time-sensitive content and want to be among the first to show up in search results with it. It's also common to ask for a single-page recrawl after a significant or extensive change to a page's content.

First things first: make sure your robots.txt file isn't blocking any pages you want crawled. Search engines provide tools that show whether robots.txt blocks a given page; for Google, you'll find them in Search Console. If nothing is blocked, you can move on to the next step.
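If you'd like a quick local sanity check before opening Search Console, Python's built-in `urllib.robotparser` can tell you which URLs a robots.txt file disallows. Treat this as a rough sketch, not a replacement for Google's tools: the standard-library parser matches rules by simple path prefix and applies the first matching rule, while Googlebot also understands `*` and `$` wildcards and follows the most specific rule, so the results can differ. The robots.txt contents, the URLs, and the `blocked_urls` helper are all hypothetical.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt: str, urls: list[str], user_agent: str = "Googlebot") -> list[str]:
    """Return the URLs that the given robots.txt text disallows for user_agent."""
    parser = RobotFileParser()
    # parse() takes an iterable of lines and marks the rules as loaded,
    # so can_fetch() answers from these rules instead of defaulting to False.
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# Hypothetical robots.txt contents for illustration only.
SAMPLE_ROBOTS = """\
User-agent: *
Disallow: /wp-admin/
Disallow: /thank-you/
"""

if __name__ == "__main__":
    pages = [
        "https://example.com/blog/hello-world/",
        "https://example.com/thank-you/",
        "https://example.com/wp-admin/options.php",
    ]
    for url in blocked_urls(SAMPLE_ROBOTS, pages):
        print("Blocked:", url)
```

To check a live site instead of a pasted string, you could create the `RobotFileParser` with `set_url("https://example.com/robots.txt")` and call `read()` in place of `parse()`.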

There are two ways to ask Google to recrawl your website. Keep in mind, though, that either way you may have to wait a while before the crawler does its job and your pages are indexed and ready to appear in search results. Submitting multiple requests won't speed things up.

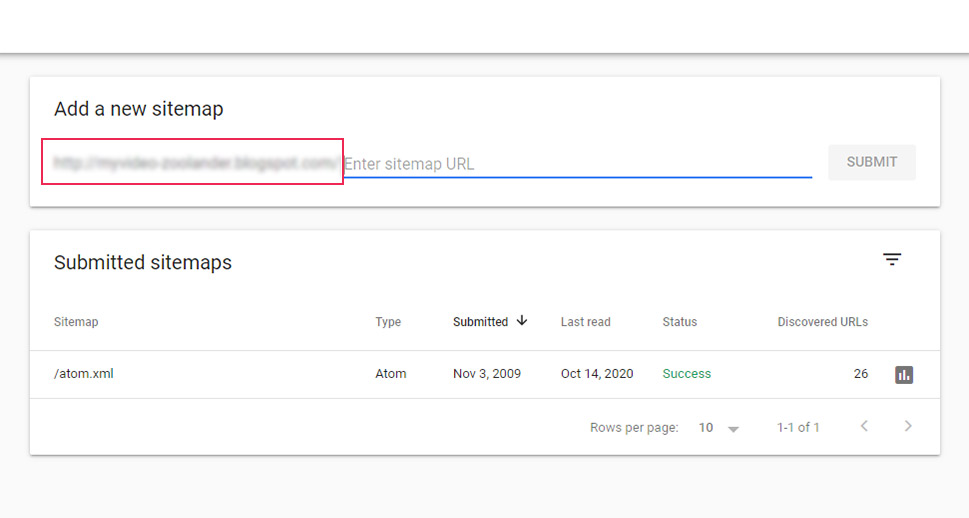

To have Google recrawl your whole website, or many URLs at once, submit a sitemap to Google. First, though, you need to create one, which is where a plugin such as Yoast SEO comes in handy. Navigate to SEO > General, and then find the XML Sitemaps option.

Make sure it's set to "on", click the question mark beside it, then click the "see the sitemap" link, and you'll have access to your website's sitemap. Once you have it, head over to Google Search Console and add your website if you haven't already.

Go to the Sitemaps section, where you can enter your sitemap's URL. If the submission succeeds, the sitemap appears in the list below. With that done, Google should have no trouble finding and crawling your pages.
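Yoast SEO builds the sitemap for you (as a sitemap index pointing to smaller sitemaps), so you won't normally write one by hand. Still, it helps to know what Google reads when you submit it. Here's a minimal Python sketch that produces a basic sitemap in the format described by the sitemaps.org protocol; the URLs and dates are placeholders, and `build_sitemap` is a hypothetical helper name.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: list[tuple[str, str]]) -> str:
    """Return sitemap XML for (URL, last-modified date) pairs."""
    urlset = ET.Element("urlset", {"xmlns": SITEMAP_NS})
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc          # absolute URL of the page
        ET.SubElement(url, "lastmod").text = lastmod  # W3C date, e.g. 2024-01-31
    body = ET.tostring(urlset, encoding="unicode")
    # Write the XML declaration by hand so it always says UTF-8.
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    print(build_sitemap([
        ("https://example.com/", "2024-01-31"),
        ("https://example.com/about/", "2024-01-15"),
    ]))
```

`<loc>` is the only required child of each `<url>` entry; `<lastmod>` is optional and tells crawlers when the page last changed.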

Alternatively, you can add the full URL of your sitemap to your robots.txt file. It should look something like this:

```text
Sitemap: http://example.com/my_sitemap.xml
```
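To confirm that your robots.txt actually advertises the sitemap, Python's standard `urllib.robotparser` module offers a `site_maps()` method (Python 3.8 and later) that returns the URLs from any `Sitemap:` lines. A minimal sketch, assuming you've copied the file's contents into a string; `sitemaps_in_robots` and the sample URL are hypothetical.

```python
from urllib.robotparser import RobotFileParser


def sitemaps_in_robots(robots_txt: str) -> list[str]:
    """Return the Sitemap: URLs declared in robots.txt text (empty list if none)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    # site_maps() returns None when the file has no Sitemap line.
    return parser.site_maps() or []


if __name__ == "__main__":
    robots = "User-agent: *\nDisallow: /wp-admin/\n\nSitemap: https://example.com/sitemap_index.xml\n"
    print(sitemaps_in_robots(robots))
```

An empty list means the parser found no `Sitemap:` line, so Google won't discover the sitemap through robots.txt either.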

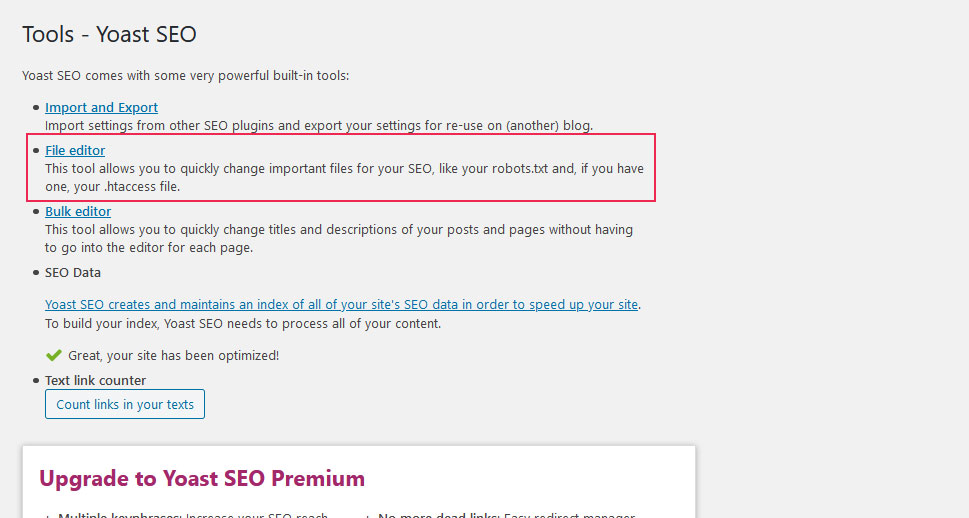

To edit the robots.txt file on your website, you can use an FTP client or rely on Yoast SEO again. In your WordPress dashboard, navigate to SEO > Tools, and then open the File editor.

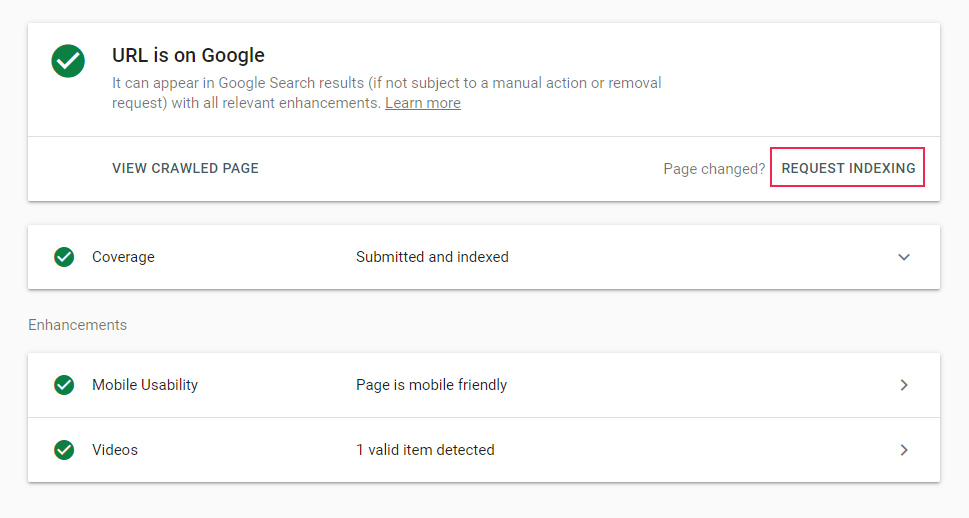

In some cases, you'll want Google to pay special attention to only a couple of URLs. The proper way to handle this is to run each of them through the URL Inspection tool. The tool lets you request a recrawl, but remember: if you have more than a couple of URLs that need recrawling, submit a sitemap instead.

Requesting a recrawl of an individual URL is as simple as inspecting it with the URL Inspection tool in Google Search Console and selecting Request Indexing. If the page has no obvious issues that would prevent indexing (something you'll know right away), it will be added to the indexing queue.

Let’s Wrap It Up!

Your website's visibility in search engines depends on their crawlers taking inventory of your website's content. If you've added new sections to your website, changed existing ones, or want to come out of the dark and let people find your website through search engines, you have two options.

Submitting a sitemap is the best way to tell Google about your whole website, and you should use it if more than just a couple of URLs need to be indexed. Otherwise, use the URL Inspection tool to pinpoint the URLs that need reindexing and ask Google to recrawl them.